In the world of applications and servers, two important tools come into play: API Gateways and load balancers. This article tackles the common API Gateway vs. Load Balancer debate. Let’s break them down into everyday terms to understand the key differences and when to use each one. Get ready for a journey through tech infrastructure!

API Gateways: Your Application Coordinator 🚦

Imagine you’re hosting a big party with different food stations, games, and activities. To keep everything organised, you appoint a coordinator who handles all the requests, ensures everyone follows the rules, and manages the flow of guests. That’s what an API gateway does for your applications! It acts as a coordinator, providing a central entry point for users to access multiple services. It simplifies the process, enhances security, and directs traffic to the right destinations.

Now that we have an analogy, let us look at what an API Gateway is in technical terms.

An API Gateway is a server or service that acts as an intermediary between clients and backend services or APIs (Application Programming Interfaces). It provides a centralized entry point through which clients access multiple services or APIs: it routes requests, handles authentication and authorization, and performs other tasks that improve API management and security.
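To make the definition concrete, here is a minimal sketch of the two core gateway duties, authentication and routing, as plain Python. It is not any real gateway product's API: the service names, the `/products` and `/orders` prefixes, and the hard-coded token set (standing in for a real identity provider) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Set, Tuple


@dataclass
class Request:
    path: str
    token: Optional[str] = None


# Hypothetical backends. In a real system each would be a separate
# microservice reached over the network, not a local function.
def product_service(req: Request) -> str:
    return f"product-service handled {req.path}"


def order_service(req: Request) -> str:
    return f"order-service handled {req.path}"


class ApiGateway:
    def __init__(self, routes: Dict[str, Callable[[Request], str]], valid_tokens: Set[str]):
        self.routes = routes              # path prefix -> backend handler
        self.valid_tokens = valid_tokens  # stand-in for a real token validator

    def handle(self, req: Request) -> Tuple[int, str]:
        # 1. Authentication: reject requests that lack a known token.
        if req.token not in self.valid_tokens:
            return 401, "unauthorized"
        # 2. Routing: forward to the backend with the longest matching path prefix.
        matches = [prefix for prefix in self.routes if req.path.startswith(prefix)]
        if not matches:
            return 404, "no route"
        return 200, self.routes[max(matches, key=len)](req)


gateway = ApiGateway(
    routes={"/products": product_service, "/orders": order_service},
    valid_tokens={"secret-token"},
)
print(gateway.handle(Request("/products/42", token="secret-token")))
# (200, 'product-service handled /products/42')
print(gateway.handle(Request("/orders/7")))
# (401, 'unauthorized')
```

Authentication runs before routing in this sketch so that unauthenticated traffic never reaches a backend service.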

When to Use API Gateways:

- Routing and Request Aggregation:

An API Gateway directs client requests to the appropriate backend services or APIs based on predefined rules, and can combine the responses from several services into a single reply.

Example: An e-commerce API Gateway routes requests for product information, user authentication, and order processing to the corresponding microservices.

- Protocol Translation:

An API Gateway converts communication protocols between clients and backend services.

Example: A mobile banking API Gateway translates RESTful API requests from mobile apps into SOAP requests understood by the legacy banking system.

- Security and Authentication:

An API Gateway ensures secure access to APIs by handling authentication and authorization.

Example: A social media API Gateway validates user access tokens, checks permissions, and allows authorized users to post, like, or share content.

- Rate Limiting and Throttling:

An API Gateway enforces usage limits to prevent abuse and control resource allocation.

Example: A weather service API Gateway limits requests to 100 per minute per API key, protecting the backend API from excessive usage.

- Caching and Response Management:

An API Gateway caches frequently accessed data and modifies or combines responses as needed.

Example: A news aggregation API Gateway caches popular articles, serving them directly to clients and improving response time.

- Logging and Analytics:

An API Gateway captures request logs and provides analytics for monitoring and performance analysis.

Example: A payment processing API Gateway logs requests, measures response times, and generates reports on API usage and trends.
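The weather-service rate limit above (100 requests per minute per API key) can be sketched with a fixed-window counter. This is one common technique among several (sliding windows and token buckets are others); the article does not say which algorithm a given gateway uses, and the class name and the injectable `clock` parameter are hypothetical, added here so the behaviour can be demonstrated without waiting a real minute.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per API key in each `window_seconds` window."""

    def __init__(self, limit: int = 100, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        # api_key -> (start time of the current window, requests counted in it)
        self.counters: Dict[str, Tuple[float, int]] = {}

    def allow(self, api_key: str) -> bool:
        now = self.clock()
        start, count = self.counters.get(api_key, (now, 0))
        if now - start >= self.window:
            # The previous window has expired, so start a fresh one.
            start, count = now, 0
        if count >= self.limit:
            return False  # over the limit; a gateway would typically reply HTTP 429
        self.counters[api_key] = (start, count + 1)
        return True


# Usage with a fake clock (hypothetical API keys).
fake_now = [0.0]
limiter = FixedWindowRateLimiter(limit=100, window_seconds=60, clock=lambda: fake_now[0])
allowed = sum(limiter.allow("key-A") for _ in range(105))
print(allowed)  # 100
fake_now[0] = 61.0  # a new one-minute window begins
print(limiter.allow("key-A"))  # True
```

A fixed window is simple but lets a client send up to twice the limit in a short burst straddling a window boundary; that is the usual reason to prefer a sliding window or token bucket.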

API Gateways streamline API management, enhance security, and improve performance by acting as a central hub for client-server interactions.
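Much of the performance gain comes from the caching described in the list above. Below is a minimal sketch of response caching with a time-to-live (TTL), keyed by request path. The `TtlCache` class, the `news_backend` function, and the 30-second TTL are hypothetical illustrations, not settings from any particular gateway.

```python
import time
from typing import Callable, Dict, Tuple


class TtlCache:
    """Cache backend responses for `ttl_seconds`, keyed by request path."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch = fetch      # function that calls the real backend
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[float, str]] = {}  # path -> (expires_at, body)

    def get(self, path: str) -> str:
        now = self.clock()
        entry = self.entries.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]             # cache hit: the backend is not contacted
        body = self.fetch(path)         # miss or expired entry: call the backend
        self.entries[path] = (now + self.ttl, body)
        return body


# Usage: a hypothetical news backend that records how often it is called.
calls = []

def news_backend(path: str) -> str:
    calls.append(path)
    return f"article body for {path}"

fake_now = [0.0]
cache = TtlCache(news_backend, ttl_seconds=30, clock=lambda: fake_now[0])
cache.get("/articles/top")
cache.get("/articles/top")
print(len(calls))  # 1
fake_now[0] = 31.0  # the cached entry has expired
cache.get("/articles/top")
print(len(calls))  # 2
```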

Load Balancer

Imagine you’re running a popular restaurant with multiple chefs in the kitchen. To ensure a smooth dining experience, you hire a master expediter who takes incoming orders, assigns them to the available chefs, and ensures everyone gets their food on time. That’s what a load balancer does for your servers! It acts as an expediter, evenly distributing incoming requests across multiple servers to optimize performance and prevent overload.

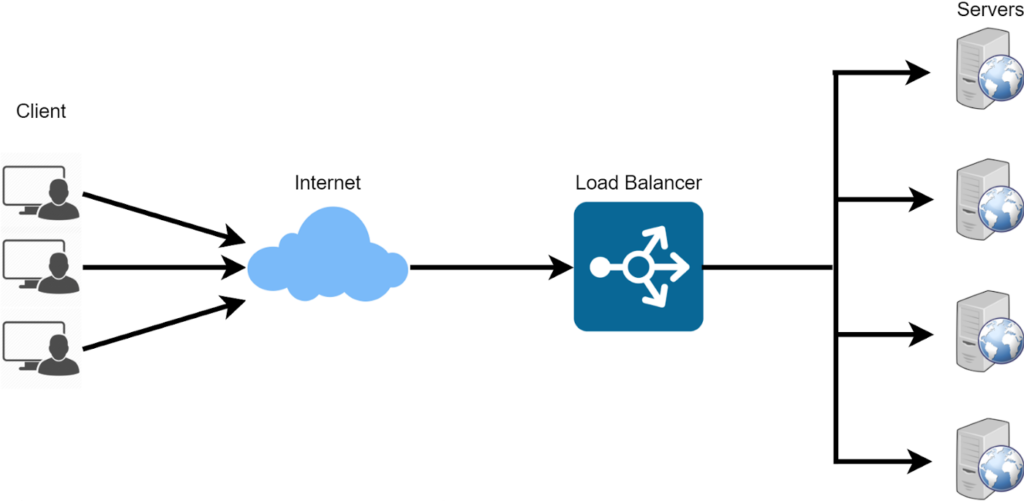

Let us now look at load balancers in more technical terms.

A load balancer is a device or software component that distributes incoming network traffic across multiple servers or resources to ensure efficient utilization, high availability, and scalability of the system. Its primary purpose is to evenly distribute the workload among the servers, preventing any single server from becoming overwhelmed and improving overall system performance and reliability.

For example, suppose you have a service with a very high TPS (transactions per second). A single server cannot handle all those requests, so you spin up multiple servers and put a load balancer in front of them. The load balancer decides which server should handle each request, for example by choosing the one with the fewest active connections or the fastest recent response times, and routes the request to that server.
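A load balancer cannot know in advance which server will answer fastest, so it approximates that with a selection algorithm. A common one is "least connections": send each new request to the server that currently has the fewest in-flight requests. The sketch below is a minimal single-process illustration; the server names are hypothetical, and a real load balancer would track connections itself rather than rely on explicit `acquire`/`release` calls.

```python
from typing import Dict, List


class LeastConnectionsBalancer:
    """Send each request to the server with the fewest in-flight requests."""

    def __init__(self, servers: List[str]):
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        # min() returns the first minimum, so ties go to the earliest server in the list.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Called when the server has finished handling a request.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
a = lb.acquire()  # app-1
b = lb.acquire()  # app-2
lb.release(a)     # app-1 finishes its request
c = lb.acquire()  # app-1 again, since it now has 0 in-flight requests
```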

Here are some key aspects and functionalities of a load balancer:

- Traffic Distribution: A load balancer receives incoming requests from clients and evenly distributes the traffic across multiple servers or resources based on predefined algorithms and configurations. This helps to prevent any individual server from being overloaded while maximizing the utilization of available resources.

- High Availability: Load balancers enhance the availability of services by continuously monitoring the health and status of backend servers. If a server becomes unresponsive or fails, the load balancer automatically redirects traffic to the remaining healthy servers, ensuring uninterrupted service for clients.

- Scalability and Elasticity: Load balancers facilitate horizontal scalability by allowing additional servers to be added to the server pool easily. As the demand for resources increases, load balancers distribute traffic across the newly added servers, enabling the system to handle higher loads and accommodate growing user demands.

- Session Persistence: Load balancers can maintain session persistence or sticky sessions, ensuring that subsequent requests from the same client are directed to the same backend server. This is important for applications that require session state or need to maintain context during the user’s interaction.

- Health Checks and Monitoring: Load balancers regularly perform health checks on backend servers to ensure they are responsive and able to handle requests. They monitor server performance, detect failures, and dynamically adjust the traffic distribution to route requests only to healthy servers.

- SSL Termination: Load balancers can offload SSL/TLS encryption and decryption, relieving the backend servers of this computationally intensive task. They handle the SSL handshake with clients, encrypt and decrypt traffic, and then forward the requests to the backend servers over unencrypted connections, improving server performance.
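Traffic distribution, health checks, and session persistence from the list above can be combined in one small sketch. It assumes round-robin as the distribution algorithm and a caller-supplied `is_healthy` function standing in for a real health probe (for example an HTTP request to a status endpoint); the class name and server names are hypothetical. Sticky sessions are done here by hashing a session ID with SHA-256, because Python's built-in `hash()` for strings changes between processes.

```python
import hashlib
from typing import Callable, List, Optional


class HealthAwareBalancer:
    """Round-robin over healthy servers, with optional hash-based sticky sessions."""

    def __init__(self, servers: List[str], is_healthy: Callable[[str], bool]):
        self.servers = servers
        self.is_healthy = is_healthy  # stand-in for a real health probe
        self.healthy = list(servers)
        self.next_index = 0

    def run_health_checks(self) -> None:
        # Meant to run periodically: drops failed servers and re-adds recovered ones.
        self.healthy = [s for s in self.servers if self.is_healthy(s)]

    def pick(self, session_id: Optional[str] = None) -> str:
        if not self.healthy:
            raise RuntimeError("no healthy servers available")
        if session_id is not None:
            # Sticky session: the same ID maps to the same server
            # as long as the set of healthy servers does not change.
            digest = hashlib.sha256(session_id.encode()).digest()
            return self.healthy[int.from_bytes(digest[:8], "big") % len(self.healthy)]
        server = self.healthy[self.next_index % len(self.healthy)]
        self.next_index += 1
        return server


down = set()
lb = HealthAwareBalancer(["app-1", "app-2", "app-3"], is_healthy=lambda s: s not in down)
first_round = [lb.pick() for _ in range(4)]   # ['app-1', 'app-2', 'app-3', 'app-1']
down.add("app-2")                             # app-2 stops responding
lb.run_health_checks()
second_round = [lb.pick() for _ in range(4)]  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Hashing against the healthy list means sessions get reassigned whenever a server fails or recovers; many load balancers avoid this by storing the chosen server in a cookie instead.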

Choosing the Right Tool:

- Use API gateways when you want to simplify user access, enhance security, and manage the flow of requests within your application.

- Use load balancers when you need to evenly distribute workload, optimize performance, and ensure high availability of your servers.

Using API Gateways and Load Balancers together

When using Load Balancers and API Gateways together, there are two common strategies to consider:

- External Load Balancer with API Gateway:

  - In this strategy, an external Load Balancer sits in front of both the API Gateway and the backend servers hosting the APIs.

  - The Load Balancer receives incoming client requests and distributes them across multiple instances of the API Gateway.

  - The API Gateway then routes the requests to the appropriate backend servers hosting the APIs.

  - This strategy allows for load balancing at both the API Gateway level and the backend server level, providing scalability, high availability, and improved performance.

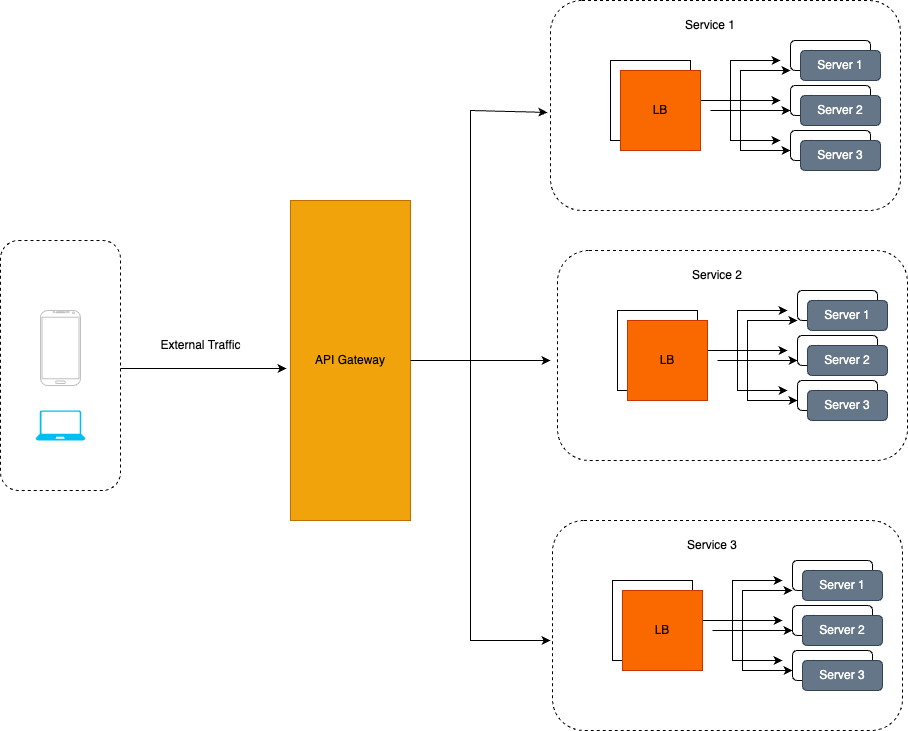

- Internal Load Balancer with API Gateway:

  - In this strategy, the Load Balancer is deployed internally within the infrastructure, specifically for load balancing backend servers.

  - The API Gateway is responsible for receiving client requests and handling API management functionalities.

  - The API Gateway interacts directly with the internal Load Balancer to distribute requests across the backend servers.

  - This strategy allows the API Gateway to focus on API management tasks, while the Load Balancer ensures even distribution of traffic among the backend servers.
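To show where each layer sits, here is a toy model of the request path. It wires up both layers at once: an external round-robin balancer picks an API Gateway instance (as in the first strategy), and each gateway hands the request to an internal balancer for the target service (as in the second). In a real deployment you might use only one of the two layers. Every name here (`gw-a`, `orders-1`, and so on) is hypothetical, and round-robin is assumed purely for simplicity.

```python
import itertools
from typing import Dict, List


class RoundRobin:
    """Tiny round-robin load balancer over a fixed list of targets."""

    def __init__(self, targets: List[str]):
        self._cycle = itertools.cycle(targets)

    def pick(self) -> str:
        return next(self._cycle)


class Gateway:
    """Routes by path prefix to an internal load balancer for each service."""

    def __init__(self, name: str, service_lbs: Dict[str, RoundRobin]):
        self.name = name
        self.service_lbs = service_lbs

    def handle(self, path: str) -> str:
        for prefix, lb in self.service_lbs.items():  # first matching prefix wins
            if path.startswith(prefix):
                return f"{self.name} -> {lb.pick()}"
        return f"{self.name} -> 404"


# Internal load balancers, one per backend service.
internal = {
    "/orders": RoundRobin(["orders-1", "orders-2"]),
    "/products": RoundRobin(["products-1"]),
}
# Several gateway instances share the same internal load balancers.
gateways = {name: Gateway(name, internal) for name in ["gw-a", "gw-b"]}
# External load balancer spreading client requests across gateway instances.
external = RoundRobin(list(gateways))

results = [gateways[external.pick()].handle(p) for p in ["/orders/1", "/orders/2", "/products/9"]]
print("\n".join(results))
# gw-a -> orders-1
# gw-b -> orders-2
# gw-a -> products-1
```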

Pros and Cons of the Strategies:

- The “External Load Balancer with API Gateway” strategy is beneficial when scalability and high availability are crucial at both the API Gateway and backend server levels. It provides flexibility for horizontally scaling the API Gateway and ensures even distribution of requests across backend servers.

- The “Internal Load Balancer with API Gateway” strategy is suitable when the focus is primarily on API management and offloading the load balancing responsibilities to a dedicated internal Load Balancer. It simplifies the API Gateway configuration and allows it to concentrate on authentication, authorization, and other API-related tasks.

The choice between the two strategies depends on specific requirements, infrastructure setup, and the desired level of control and flexibility needed for load balancing and API management.

API gateways and load balancers are essential tools in the world of tech infrastructure. API gateways act as coordinators, simplifying user access and enhancing security, while load balancers distribute workload and improve performance. By understanding their roles and when to use each one, you can build robust and efficient systems that provide a seamless experience for users. Happy coordinating and distributing!

